PLANNAR: Co-Visioning with AR

Summary

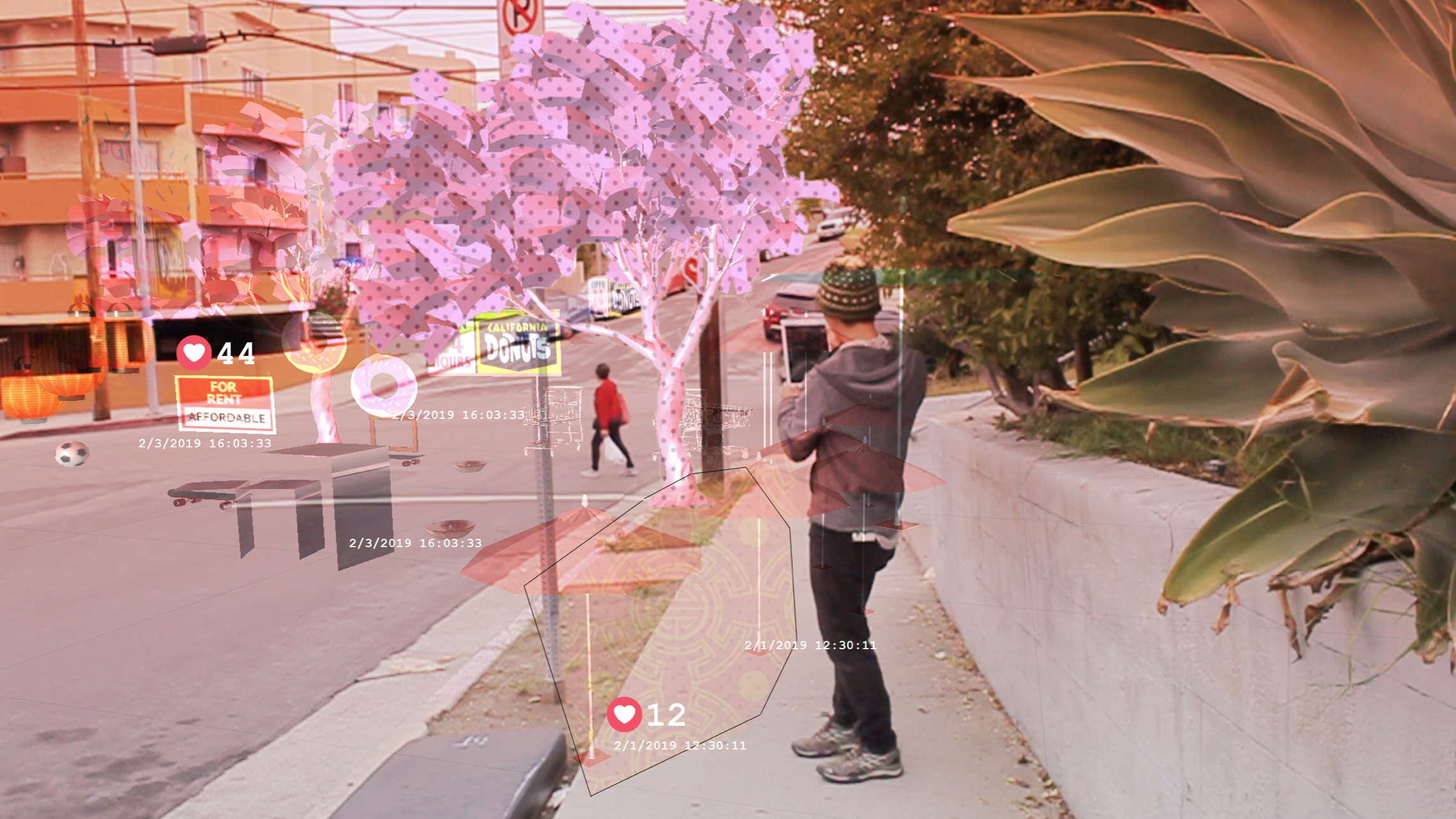

PLANNAR is a mobile AR approach to cultivating a generative urbanism, mobilizing long-term residents of Chinatown, Los Angeles to participate in the visioning of their neighborhood through the placement and “hearting” of literal and symbolic virtual objects around the neighborhood.

Role

Conceptual Design, Research, UI/UX, Visual Design, Coded Prototyping, 3D Assets, Motion Design, Video Editing, Community Outreach

Tools

Unity 3D, Rhino 3D, After Effects, Photoshop, Illustrator, Sketch

Outcomes

PLANNAR was a 6-month thesis project I completed at ArtCenter College of Design from October 2018 to April 2019. In addition to deploying and working locally within my neighborhood in Chinatown, I have been fortunate enough to share PLANNAR at:

Primer Conference 2019 at Parsons School of Design (Emerging Designer Exhibition)

Glendale Tech Week (Pop-up Exhibit)

Gensler-LA Office (to an internal AR team)

Refactor Camp 2019 (Lightning Speaker)

Association of Professional Futurists FutureFest Conference 2019 (Speaker)

UCLA’s Masters Urban Planning Visual Communication class (Guest Lecturer)

Background

This project arises from my discontent with community engagement strategies typically used in master planning, where conventional visioning methods entail brief workshops with only a few residents and use their participation as a proxy for consensus. To counter this issue of misrepresentation, PLANNAR facilitates the scalable generation of many site- and time-specific ‘visions for the future,’ asserting a bottom-up model of urban planning. Rather than asking residents to show up at a workshop, PLANNAR meets residents where they are: walking around the neighborhood and using social media. In doing so, I propose that virtual space is new territory for meaningful public participation.

Research + Signals

A few forces at play in 2018-2019 formed the basis of my assumptions and my interest in pursuing this project:

- Paying attention to the rapid increase in development projects in my neighborhood (Chinatown)

- Following and getting involved with the work of grassroots organizations in Los Angeles like LA Tenants Union, NOlympics, CCED, Defend Boyle Heights, and Chinatown Sustainability Dialogue, who were all critiquing the city’s response to rising gentrification and evictions

- Market projections for mass adoption of Augmented Reality in the US pointing to a higher adoption rate of mobile AR (as opposed to HMDs or VR)

- General public frustration and dissatisfaction with the current community engagement process for neighborhood development

- Lack of engagement tools in the AR space specifically designed for this use case

Design Inquiry

Problem Statement

How might mobile AR serve as a new tool for engaging residents in the urban development process?

Marking the dimensions and space for AR objects to exist in the urban realm

From there, more questions arose:

- What are the affordances of AR?

- What are the implications of using this technology in a civic context?

- Whom is this product ultimately for?

- What are the constraints and limitations of this technology in communicating desires or cultural expression?

- How would this new co-visioning system work and change the existing process?

- Is AR an accessible medium for people who are not as digitally connected? What are some low-tech alternatives?

Content + UI Design

My goal was to create a very simple interface that even the least tech-literate resident could use: draggable icons that would drop a 3D object into place. With limited C# coding skills in Unity but needing a coded prototype to begin testing AR functionality, I used Sawant Kenth’s ARFoundation Template Photo App made in Unity 3D to get started.

Captured Neighborhood Artifacts:

Using mobile photogrammetry apps, I went around the neighborhood capturing 3D scans of famous cultural objects, such as the Bruce Lee statue, the Foo Dogs in front of most buildings, and the rocks that are part of many plazas in Chinatown. As interesting as this technology was, I did not continue down this route because of the labor required to create these photogrammetry captures. A good capture needs full 360-degree access in abundant light, which is impractical for most urban elements.

Trees:

During one of my interviews with residents, I learned that Chinatown has the worst air pollution in all of LA County. This gave me the idea to focus my next deployment on trees, as at this stage, I was mainly interested in understanding the physical implications and UX of placing simple objects. Additionally, the focus on trees would be a strategic move for an initial prototype because it:

- Was a universal element of our built environment

- Had differing cultural significance to a variety of ethnic groups

- Had differing utilitarian significance to a variety of residents (i.e. some would want a tree for shade if on their walking commute, some for beauty, some for literal picking of its fruits)

- Would be a realistic use case that would happen given that Chinatown has the worst air pollution and is an area with not that many trees (Mayor Garcetti had recently appointed a Chief Urban Forestry Officer)

Other than placing a tree in a desired area, how else might an AR interface afford residents new ways to express their preferences?

Early Experiments:

I built a quick prototype that had different kinds of trees one could place. Based on an anecdote from a resident, the following storyboard inspired some product features:

From this scenario, I thought about some more questions:

- Where would she need to be to use this app? She would need to be walking in a safe area to place a virtual tree (i.e. a sidewalk, a park).

- When/how frequently would she use this app? The use of the app in this case is triggered by a response to the weather, or a civically-minded attitude.

- How is she using the app? She’s taking a moment during her walking commute to quickly create a sketch and ‘share’ it.

- Why is she using the app? She doesn’t know of other ways to ‘report’ a desire, so she is using this platform to get others to rally behind it so it comes true. She is motivated to have the neighborhood designed according to her preferences.

If a lot of the experiences for residents placing objects centered around their human-scale walking journeys, there had to be more ways to break out of that 1:1 placing scale and make a larger impact.

Controlling # of Trees, telling stories with trees: UI

Before moving into Unity to figure out how to make it work, I made some narrative prototypes in After Effects, imagining how a resident could start to view their urban surroundings differently, and how the constraints of what could be placed would show. A slider with a minimum of ‘0 trees’ and a maximum value set by an urban planner would inform potential scenarios for tree placement. Here, the app would collect the number of trees, and the resident would be able to see its impact visually from different viewpoints.

After Effects Prototype 1: Slider for increasing tree density at LA Historic Park from multiple view points

Other ideas centered more around the functionalities of adding

Growth Slider:

So residents can preview what the tree will look like as it grows (and give idea about how much shade it could provide)

Linear Distribution Slider:

So residents can fill a border or linear path on a sidewalk quickly without having to walk too much and place each tree individually

Exponential Distribution Slider:

So residents can create density quickly & express larger areas
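The two distribution sliders could be sketched roughly as follows. This is illustrative Python, not code from the Unity prototype: the function names, the evenly spaced layout, and the "doubling per slider step" rule are all assumptions made for the sake of the example, and the cap stands in for the maximum value set by an urban planner.

```python
def linear_positions(start, end, count):
    """Evenly space `count` trees along a straight path.

    `start` and `end` are hypothetical (x, z) ground coordinates in meters.
    """
    if count <= 0:
        return []
    if count == 1:
        return [start]
    (x0, z0), (x1, z1) = start, end
    return [
        (x0 + (x1 - x0) * i / (count - 1), z0 + (z1 - z0) * i / (count - 1))
        for i in range(count)
    ]


def exponential_count(slider_step, max_trees):
    """Map a slider step to a tree count that doubles each step (assumed rule).

    Step 0 means no trees; the result never exceeds `max_trees`.
    """
    if slider_step <= 0:
        return 0
    return min(2 ** (slider_step - 1), max_trees)
```

With a mapping like this, one drag of the linear slider fills a sidewalk edge, while a few steps of the exponential slider are enough to express a dense grove without placing each tree by hand.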

Resident-Generated Tree-scapes:

Shifting Focus to AR Objects

After processing some feedback both from my thesis advisors and residents, I realized that my metric of success in this whole endeavor was whether people could really use the objects to express their own desires.

Without having AR objects that residents resonated with or wanted, the development of all the interesting user experiences that I could develop (such as building out the different preview features) would be a waste of energy, and I’d be building something that nobody wanted.

Having already invested time into this build, one possible workaround was to implement rules around the significance of the objects and how they would be used during the ‘co-visioning process.’

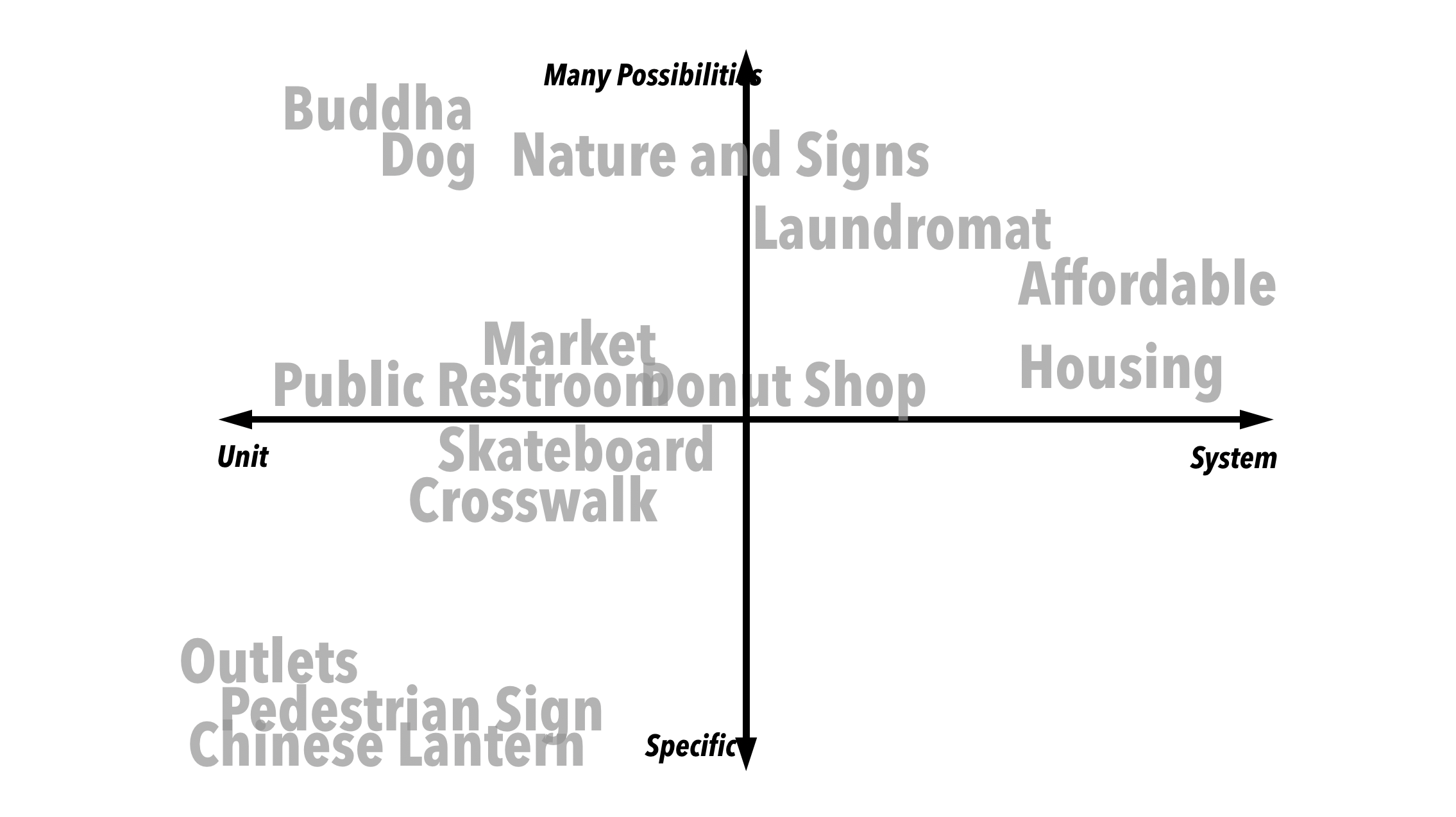

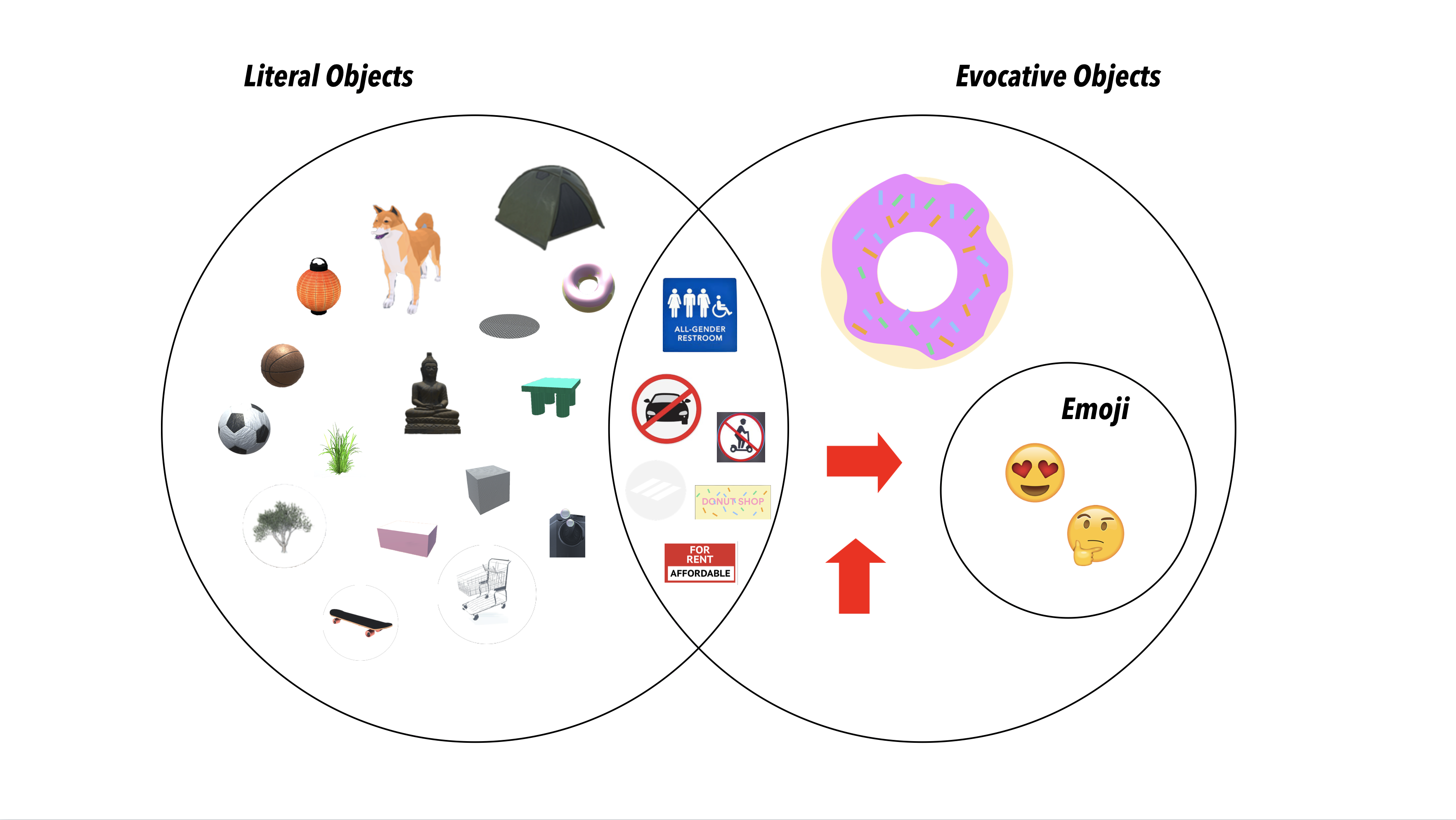

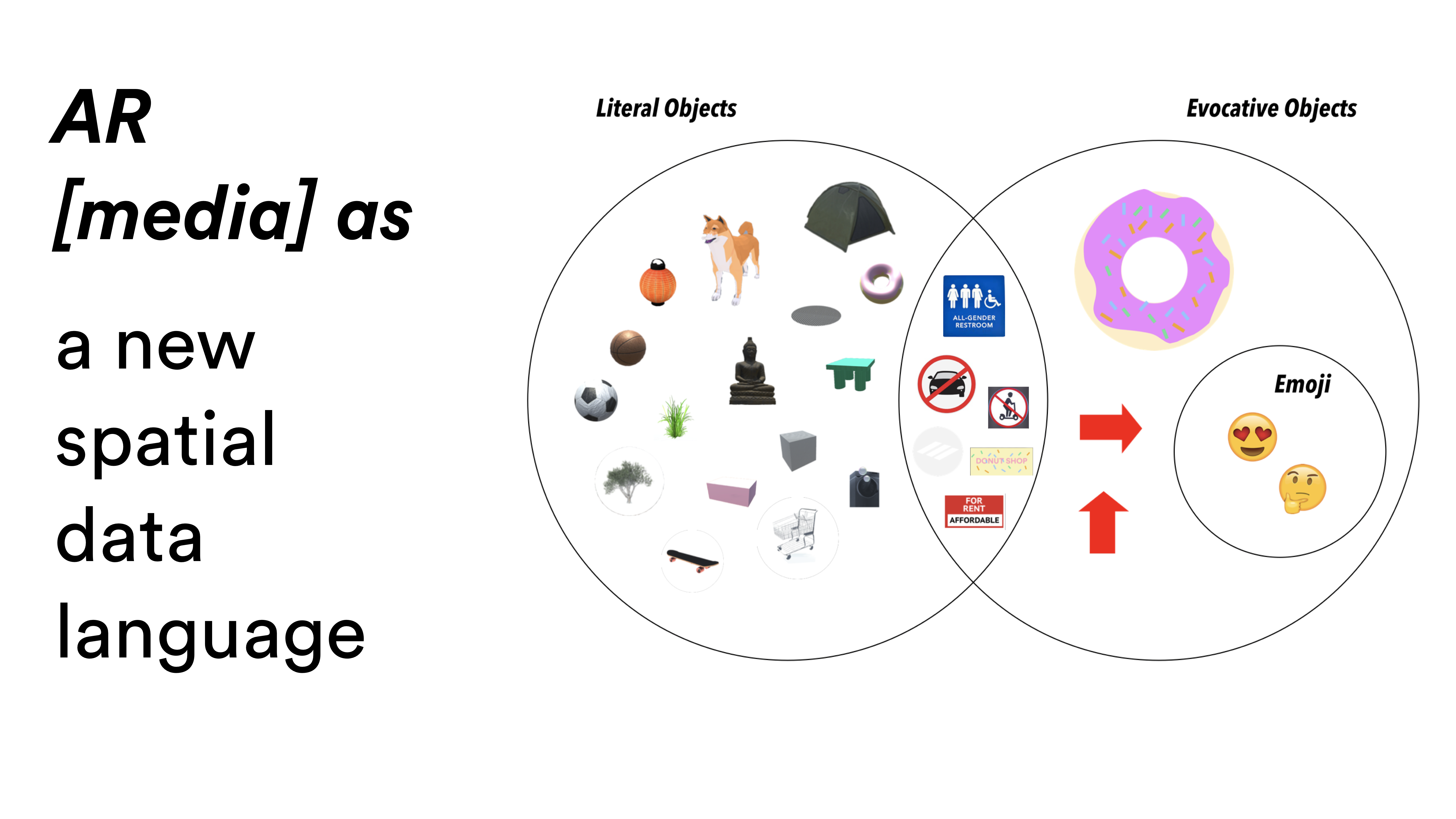

Literal, Evocative, Hybrid, and Emoji Objects

One of the affordances of AR is that, due to its highly versatile visual nature, objects can range from the realistic, to urban graphic design (like signs we already see today), to the symbolic (evocative objects that trigger ideas), to emojis that introduce a new meaning to space by blending emotion and sentiment into the communication.

Further Design Challenges

In comparison to other co-designing processes that use more analog tools (like post-it notes), this AR app had significant constraints on how people could contribute ideas:

- The AR objects that were pre-loaded into the ‘inventory chest’ of the app had to be authored by someone or a group of people

- The AR objects had their own aesthetics that might not be fully representative of what residents preferred, so ways to create customization would be a design challenge

Object Toolkit Prototype:

An interesting UI challenge was thinking about how to arrange objects so residents would have a range of options when a moment of frustration, inspiration, or proactiveness came about. A resident could create a scene, capture, geolocate this scene, share it, and other residents could ‘heart’ it if they were open to this future state. This interface featured an inventory chest that would give the resident options for placing:

- ‘found’ objects that one could capture while going around their neighborhood (e.g. Chinese lanterns in the Plaza),

- natural objects (such as drought-resistant LA native trees),

- geometric objects that could visually represent form (e.g. benches),

- and options for uploading digital content (GIFs, photos).

From testing and asking residents about what they wanted, it seemed that this level of abstraction was not necessary. In fact, the final UI of this app included objects that I personally crafted or sourced from the internet, based on actual requests from residents I interviewed. After interviewing them and asking about what they wish existed or would represent their community, I would make the 3D assets, create the new build, and ask them to use it over the next three days and send me photos.

User Generated Objects & Visions

Co-Design System

With more resources and participation, the following is a flow for how these objects would be developed over time:

Explaining how the app works before installing it on a resident’s phone

A Chinatown resident placing a tree on Alpine St. and Bunker Hill Ave. during a Play Walk

Final Product Features

Live with Objects Over Time

Live with placed objects and notice their simulated changes in context (e.g. a tree that grows or shifts with the seasons)

Make New Connections

Form new social connections, whether creating relationships with strangers over a shared idea, concern, or simply being silly and imagining these futures with others in them

Experience the Future through Others’ Eyes

View objects from multiple points of view (not just literal vantage points, but others’ perspectives)

Learn Local Culture

Learn the cultural significance of objects requested by different groups in the community

Stay Updated

Be informed of the visioning discussion by reviewing weekly recaps, going back in time to see top highlights like most placed objects, most “liked” visions, resident feedback or requests for objects
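As a rough illustration of the weekly recap, the tally behind "most placed objects" and "most liked visions" could look like the sketch below. It is illustrative Python rather than anything from the prototype, and the record shape (`id`, `objects`, `hearts`) is a hypothetical stand-in for however shared visions would actually be stored.

```python
from collections import Counter


def weekly_recap(visions, top_n=3):
    """Summarize one week of shared visions.

    Each vision is assumed to be a dict with an "id", a list of placed
    "objects", and a "hearts" count (hypothetical field names).
    """
    # Count how often each object type was placed across all visions.
    placed = Counter(obj for vision in visions for obj in vision["objects"])
    # Rank visions by the number of hearts they received.
    ranked = sorted(visions, key=lambda vision: vision["hearts"], reverse=True)
    return {
        "most_placed": placed.most_common(top_n),
        "most_hearted": [vision["id"] for vision in ranked[:top_n]],
    }
```

A recap like this only needs the placements and hearts that the app already collects, so it could be generated without asking residents for anything extra.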

Additional Testing & Outreach

After ‘completing’ my thesis, I was still eager to do more testing with different demographics, so I set up one-hour Play Walks with elderly Chinese long-term residents and local high-schoolers at a non-profit, Chinatown Service Center.

My biggest worry was whether AR technology would be too inaccessible to the elderly residents. But after a short hour of walking around with them and a translator, I found that they really enjoyed the app and had a lot of fun walking around and taking photos with the strange objects. During our walk we would bump into other seniors who were out walking, and they would take part in the activity and discussion. Working with the high school students confirmed that Gen Z has really high expectations for mobile AR (this is the Pokemon Go generation, after all). They had no problems using the interface and, more importantly, understood the purpose of the activity very quickly. Their enthusiasm and enjoyment gave me lots of hope for involving them more in the civic process, as most had expressed that no one was asking them what they would want in their future community.

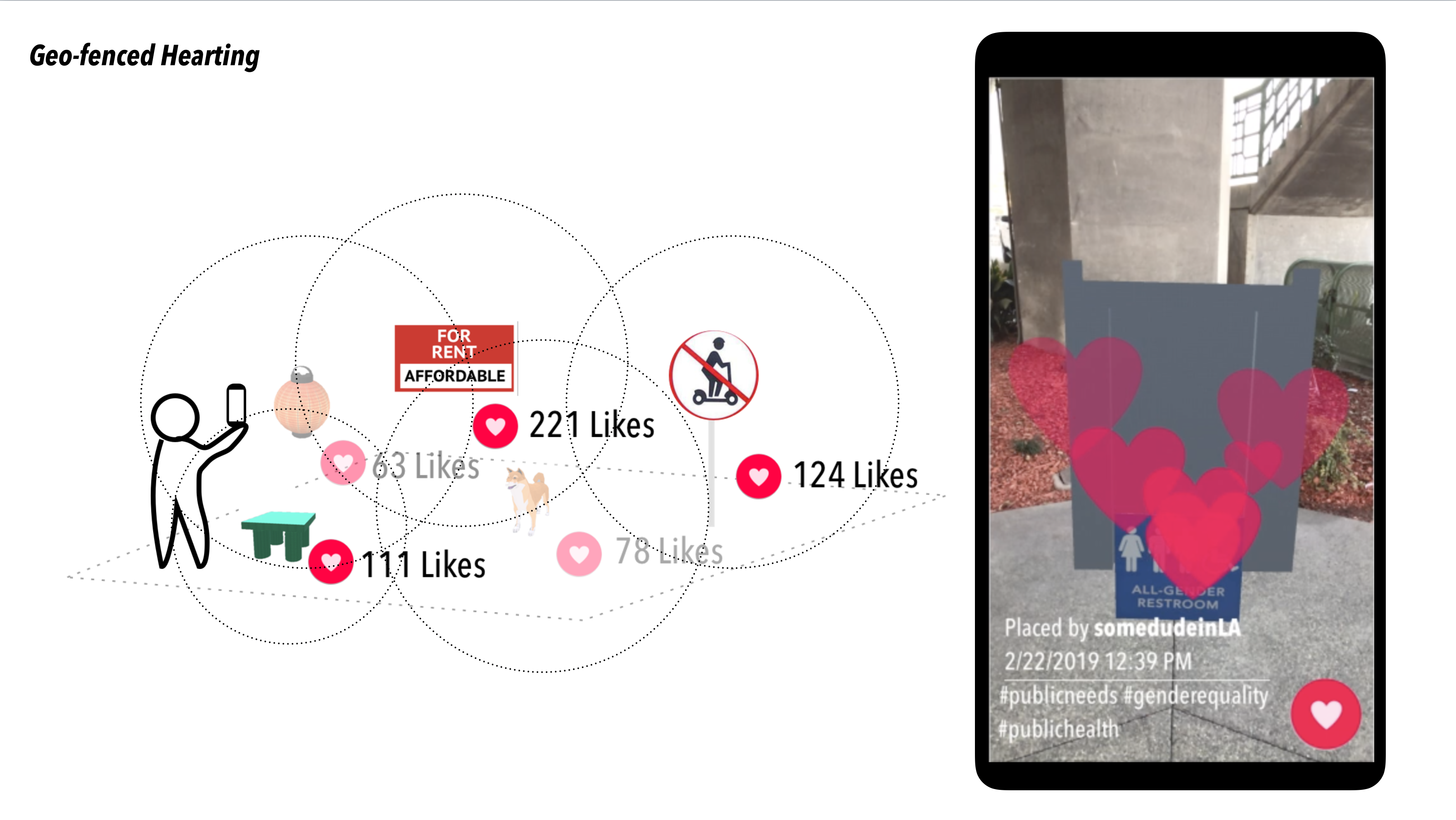

Geo-Fenced Hearting Privileging Long-Term Residents

Of the many ideas that AR has brought to mind, the most promising affordance for significant social impact is geo-fencing, paired with new governance structures that give priority to long-term residents or residents who have been historically oppressed. Residents, visitors, and commuters can each perform a different set of actions on the platform. While anyone can view visioning activity on a map and in the different locations in the neighborhood, only residents are allowed to “heart” or “dislike” visions, giving them the power to have final say in what objects are featured in the next round of “Play Walks.” This feature prevents passive participation or astroturfing and allows the ideologies and preferences of long-term residents to be integrated into the system before those of newer residents, thereby slowing down the ways in which technology colonizes the urban environment.
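One way such a rule could be expressed is sketched below, in illustrative Python rather than the prototype's code. The role names follow the text, but the permission table, the polygon fence, and the function names are assumptions; how residency would actually be verified is left open here.

```python
VIEW, HEART, DISLIKE = "view", "heart", "dislike"

# Assumed permission table: everyone can view, only residents can react.
PERMISSIONS = {
    "resident": {VIEW, HEART, DISLIKE},
    "visitor": {VIEW},
    "commuter": {VIEW},
}


def inside_fence(point, polygon):
    """Ray-casting test: is `point` inside the polygon given as (x, y) vertices?"""
    x, y = point
    inside = False
    for i in range(len(polygon)):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % len(polygon)]
        # Only edges that straddle the horizontal line through the point count.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def can_perform(role, action, vision_location, fence):
    """Allow an action only if the role permits it and the vision lies in the fence."""
    allowed = action in PERMISSIONS.get(role, set())
    return allowed and inside_fence(vision_location, fence)
```

Keeping the rule as a small table makes the governance choice explicit and easy to debate: changing who gets to heart is a one-line edit rather than a change buried in interface code.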

Conclusion

The scope for this thesis was ambitious, and I learned throughout the process that it’s important for designers to know how to manage their time and resources. Dealing with technical issues of having a working app after every design tweak was the biggest learning curve, as was getting out of my comfort zone and recruiting resident participants that I didn’t know. If this project were to continue, I would definitely spend more time in the following areas:

- Have a more streamlined process for gathering input from the community on what issues, aesthetics and objects are of interest & have a more critical discussion about what’s being lost when cultural values are translated into AR

- Do more experiments with the different types of AR objects (literal, evocative, etc.)

- Test or simulate weekly voting to understand the crowdsource dynamics of this app in building consensus (or not)

- Map all of the different locations, assets, and analyze to find patterns to validate whether this approach is useful to city planners, developers, or researchers

- Think through the platform governance structures & provisions

- Hire a development team so I can focus my energy on design, strategy, and execution 😂️

With greater adoption of AR as a common language among residents, coupled with greater technological progress in the AR cloud and spatial mapping, PLANNAR proposes that these objects-as-preferred-visions be used as data points to inform urban development policy recommendations, creating counter-blueprints to today’s mixed-use development trends and foregrounding the local imagination and ways of knowing.

Key Framing Outcomes

Further Research Areas

New Workflows for the Future of Making + Planning

ArtCenter Grad Show @Pasadena Convention Center

^ Magical moment

Acknowledgements:

Thesis advisors: Sean Donahue, Elizabeth Chin, W.F. Umi Hsu, Jesse Kriss, Mimi Zeiger, Tim Durfee, Elise Co

Special thanks to: Jorge Nieto, Iciar Rivera, Tim Mok, Stanley Moy, Sophat, and the folks at Chinatown Sustainability Dialogue Group for all your help!
